Best Cloud GPU Providers

Whether your company is involved in 3D visualization, machine learning, artificial intelligence, or any other type of heavy computing, your GPU computing strategy is critical.

Training deep learning models used to take businesses a long time. Productivity suffered because training tied up staff time, was expensive, and strained storage and physical space.

The latest generation of GPUs addresses this issue. They can handle large calculations and accelerate the training of your AI models due to their excellent parallel processing efficiency.

GPUs can train deep neural networks far faster than CPUs (speedups of up to 250 times are sometimes cited), and a new generation of cloud GPUs is transforming data science and other emerging technologies by providing even higher performance at a lower cost while allowing for easy scalability and rapid deployment.

This post will introduce you to cloud GPU concepts, how they relate to AI, ML, and deep learning, and some of the best cloud GPU platforms for deploying your preferred cloud GPU.

RECOMMENDATIONS 👍

If you're one of those people who doesn't feel like reading an entire article on cloud GPUs, how they work, and who invented the damn things... And you just need a nudge on the right path - proceed no further, because this is really all you need.

If you need a little primer on how it all breaks down - keep reading.

What are Cloud GPUs?

To better understand a cloud GPU, let's first talk about GPUs.

GPUs are specialized electronic circuitry that can rapidly alter and manipulate memory so that images and graphics can be created at a much faster rate.

It is because of their parallel structure that modern graphics processing units are more efficient at manipulating images and computer graphics than traditional central processing units (CPUs). A GPU may be found on the PC's motherboard, video card, or even the CPU die.

Cloud graphics processing units (cloud GPUs) are compute instances with powerful hardware acceleration that can be used to perform enormous AI and deep learning tasks in the cloud. You do not need a GPU installed on your own computer to use them.

NVIDIA (with its GeForce line) and AMD (with its Radeon line) are two of the most popular GPU makers.

GPU Strengths

Scalability

As your company grows, its computing workload will inevitably increase. You'll need GPU capacity that can keep up with the additional workload. Cloud GPUs help here by letting you add extra GPUs easily to handle rising demand. If you wish to scale down, you can do that quickly as well.

Cost

Instead of purchasing high-powered hardware GPUs, which are extremely expensive, you can rent cloud GPUs on an hourly basis at a lower cost. You are charged only for the number of hours you use the cloud GPUs, whereas physical GPUs cost a lot up front even if you rarely use them.
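To make the rent-versus-buy trade-off concrete, here is a minimal sketch of a break-even calculation. The purchase price and hourly rate are hypothetical placeholders, not quotes from any provider, and the function name `break_even_hours` is made up for this illustration. The sketch also ignores power, cooling, maintenance, and resale value, all of which shift the real answer.

```python
def break_even_hours(purchase_price: float, hourly_rate: float) -> float:
    """Return the number of rented hours at which rent equals the purchase price."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return purchase_price / hourly_rate


# Hypothetical figures for illustration only.
PURCHASE_PRICE_USD = 10_000.0
HOURLY_RATE_USD = 2.00

hours = break_even_hours(PURCHASE_PRICE_USD, HOURLY_RATE_USD)
print(f"Break-even after {hours:.0f} rented hours "
      f"(about {hours / 24:.0f} days of continuous use)")
```

If your expected usage is well below the break-even point, renting is likely the cheaper option; if you would run the hardware around the clock for years, buying may win.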

"OPR" Other People's Resources

You've heard of "OPM", but how about "OPR"?

Unlike physical GPUs, which take up space in your PC, cloud GPUs do not use your local resources. Running a large-scale ML model or a rendering job locally also slows down your machine.

Consider outsourcing the processing to the cloud so that your computer stays responsive. Use your machine simply to control the work rather than loading it with the heavy computation.

The role of GPUs in AI / ML / DL

Deep learning underpins much of modern artificial intelligence. It is a sophisticated ML approach that emphasizes representation learning using Artificial Neural Networks (ANNs). Deep learning models are used to process massive datasets or computationally intensive procedures.

So, how do GPUs come into play?

GPUs are designed to execute many calculations at the same time. Deep learning workloads consist largely of matrix operations that can run in parallel, so GPUs accelerate them substantially.

GPUs provide great parallel processing capabilities due to their multiple cores. Furthermore, they have increased memory bandwidth to support enormous volumes of data for deep learning systems. As a result, they are commonly used for training AI models, generating CAD models, and playing graphics-intensive video games, among other things.

Furthermore, if you wish to test several algorithms at the same time, you can use multiple GPUs: each process runs on its own GPU, so the experiments do not compete for the same device. To train models too large for a single device, you can distribute the work across many GPUs, either within a single system or across separate physical machines.
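One common way to keep separate experiments on separate GPUs is the `CUDA_VISIBLE_DEVICES` environment variable, which limits the GPUs a CUDA process can see. The sketch below only builds the per-job environments. The job names and the helper `gpu_env_for_jobs` are hypothetical, and round-robin assignment is just one simple policy.

```python
import os


def gpu_env_for_jobs(job_names, num_gpus):
    """Map each job to a copy of the environment pinned to one GPU (round-robin)."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    plans = {}
    for index, name in enumerate(job_names):
        env = dict(os.environ)
        # Each job will only see the single GPU index assigned here.
        env["CUDA_VISIBLE_DEVICES"] = str(index % num_gpus)
        plans[name] = env
    return plans


# Hypothetical experiment names, spread over two GPUs.
plans = gpu_env_for_jobs(["resnet_run", "bert_run", "gan_run"], num_gpus=2)
for name, env in plans.items():
    print(name, "-> GPU", env["CUDA_VISIBLE_DEVICES"])
```

Each environment can then be passed to `subprocess.Popen(command, env=env)` so that every training script starts with its own device.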

A few examples of how GPUs are utilized

- Image recognition with AI and ML.

- Computer images and CAD designs that use 3D computer graphics.

- Rendering polygons with texture mapping.

- Geometric calculations, for example translating and rotating vertices in a coordinate system.

- Programmable shaders that modify textures and vertices.

- Video streaming, encoding, and decoding on the GPU.

- Cloud-based and high-quality video games.

- Analytical and deep learning applications that demand general-purpose GPUs to handle large amounts of data in a scalable manner.

- Media production, from filming to content creation.

But where to begin...?

It is not difficult to get started with cloud GPUs. In reality, once you grasp the fundamentals, everything becomes simple and quick. First and foremost, you must select a cloud GPU provider, such as Google Cloud Platform (GCP).

Sign up for GCP next. All of the typical features are available here, such as cloud functions, storage choices, database administration, integration with apps, and more. You can also use Google Colaboratory (Colab), which is similar to Jupyter Notebook, to use one GPU for free. Finally, you can begin provisioning GPUs for your application.
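Once you have a notebook or instance running, a quick sanity check is to confirm that a GPU is actually visible. The sketch below assumes PyTorch as the framework (the article does not prescribe one) and falls back gracefully when it is not installed; `describe_accelerator` is a hypothetical helper name.

```python
def describe_accelerator() -> str:
    """Report which accelerator PyTorch can see, if PyTorch is installed."""
    try:
        import torch  # assumption: PyTorch is the framework in use
    except ImportError:
        return "PyTorch is not installed in this environment"
    if torch.cuda.is_available():
        return f"GPU available: {torch.cuda.get_device_name(0)}"
    return "No GPU visible; running on CPU"


print(describe_accelerator())
```

If the function reports no GPU in a hosted notebook, the runtime type usually has to be switched to a GPU runtime before the device appears.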

So, let's have a look at the various cloud GPU solutions for AI and large workloads.

The Top Cloud GPU Providers

# 1. Liquid Web Cloud GPU

Liquid Web has long been known for its enterprise-grade hosting solutions, and its Cloud GPU Hosting service is no exception. Designed for businesses and developers with demanding compute needs—from machine learning and AI to advanced graphics rendering—this service delivers robust performance, scalability, and top-notch security. Here’s an in-depth look at what makes Liquid Web Cloud GPU Hosting a recommended choice for high-performance applications.

Overview

Liquid Web’s Cloud GPU Hosting brings together the reliability of their managed hosting infrastructure with the power of dedicated GPU acceleration. With this service, you can harness high-end graphics processing capabilities to speed up complex computations and render intensive graphics workloads—all while enjoying the benefits of a fully managed cloud environment.

Key Takeaways:

- Purpose-Built for High-Performance: Optimized for GPU-accelerated applications such as AI, machine learning, 3D rendering, and data analytics.

- Managed Service: Liquid Web takes care of server maintenance, security patches, and proactive monitoring so you can focus on your projects.

- Enterprise Reliability: Backed by a 100% uptime guarantee and state-of-the-art security features.

Key Features

- High-End GPU Hardware: Access to dedicated GPU resources, typically powered by top-tier NVIDIA GPUs, designed to handle intensive parallel processing tasks.

- Scalable Infrastructure: Easily scale your resources up or down based on workload demands, ensuring you pay only for what you need.

- Fully Managed Environment: Enjoy comprehensive support for server administration, security updates, backups, and proactive monitoring, allowing you to concentrate on your core business.

- Optimized for Speed and Performance: Built on a platform that leverages the latest SSD storage, high-speed networking, and robust caching systems to ensure minimal latency and rapid data processing.

- Enhanced Security: Benefit from integrated firewalls, DDoS protection, and regular security audits, ensuring your critical applications and data remain secure.

Performance & Scalability

Liquid Web’s Cloud GPU Hosting is engineered for performance. Its main strengths are:

- Exceptional Compute Power: High-end GPUs ensure that complex tasks like deep learning model training, real-time rendering, and large-scale data analysis are performed swiftly and efficiently.

- Rapid Data Access: SSD storage and optimized networking contribute to quick load times and seamless data transfer.

- Flexible Scaling Options: Whether you’re launching a new AI project or expanding an existing application, the ability to dynamically adjust resources means you’re never held back by capacity limits.

Customer Support & Reliability

Liquid Web is renowned for its “Heroic Support®” philosophy. With 24/7/365 access to knowledgeable support staff via phone, live chat, and email, you can be confident that any technical issues or questions will be addressed quickly. This level of support is critical for GPU hosting environments, where downtime or delays can significantly impact performance and productivity.

Pros & Cons

Pros

- Outstanding Performance: Dedicated GPU resources ensure fast, efficient processing for compute-heavy tasks.

- Managed Service: Comprehensive management reduces the operational burden, letting you focus on your core business.

- Scalability: Flexible resource allocation allows for seamless scaling as your workload grows.

- Robust Security: Integrated DDoS protection, firewalls, and regular backups keep your applications safe.

- Enterprise-Grade Reliability: A 100% uptime guarantee and proactive monitoring ensure high availability.

Cons

- Premium Pricing: As with most high-performance, managed services, the cost is higher compared to standard hosting options.

- Not Ideal for Budget-Conscious Projects: Best suited for businesses with significant compute demands rather than small-scale or entry-level projects.

Final Verdict

Liquid Web is an excellent choice for businesses and developers who require high-powered GPU acceleration within a managed, reliable, and secure cloud environment. Although it comes at a premium price, the benefits of exceptional performance, scalability, and dedicated support make it well worth the investment for mission-critical applications.

If your projects involve AI, machine learning, or graphics-intensive tasks, Liquid Web Cloud GPU Hosting could be the game-changer you need to accelerate your compute workloads and maintain a competitive edge.

Frequently Asked Questions

Q: Who should consider Liquid Web Cloud GPU Hosting? A: This service is ideal for enterprises, research institutions, and developers with high-performance computing needs such as AI, machine learning, rendering, and large-scale data analytics.

Q: Is Liquid Web Cloud GPU Hosting fully managed? A: Yes, Liquid Web provides a fully managed environment that includes server administration, proactive monitoring, regular backups, and robust security measures.

Q: How scalable is the service? A: The platform offers flexible resource scaling, allowing you to adjust GPU, CPU, and storage allocations based on your workload requirements.

Q: What kind of customer support can I expect? A: Liquid Web is known for its 24/7/365 support via phone, live chat, and email, ensuring that any issues are resolved promptly.

# 2. Atlantic.net

Atlantic.net emerges as a formidable player in GPU cloud computing with premium NVIDIA hardware, flexible resource allocation, and industry-leading security protocols.

Transform your computational capabilities with Atlantic.net's specialized GPU infrastructure featuring state-of-the-art NVIDIA accelerator technology for AI development, scientific computing, and data-intensive workloads.

Atlantic.net has launched sophisticated GPU-accelerated cloud solutions engineered specifically for computation-heavy applications including artificial intelligence development, machine learning operations, and advanced analytics workloads. With their recent addition to NVIDIA's Partner Network Cloud Service Partner (NCP) program in May 2024, Atlantic.net brings enterprise-grade GPU infrastructure to businesses without requiring substantial initial hardware investments. This analysis examines their hardware offerings, technical capabilities, pricing structure, platform features, and practical applications across various industries.

Market Position and Infrastructure

As a cloud service veteran with three decades of operational experience, Atlantic.net has strategically positioned itself within the specialized GPU cloud market by partnering directly with NVIDIA to deliver cutting-edge accelerator technology. Their infrastructure spans multiple geographic regions with data centers strategically positioned in New York, London, San Francisco, Singapore, Toronto, Dallas, Ashburn, and Orlando, providing global reach with minimized latency.

Atlantic.net distinguishes itself from competitors by offering true bare-metal GPU servers rather than virtualized environments that often introduce performance overhead. This architecture eliminates virtualization layers that typically hamper computational efficiency, enabling clients to access the full processing potential of their selected NVIDIA accelerators. Furthermore, their use of NVIDIA's NVLink technology, a high-bandwidth GPU-to-GPU interconnect, lets multi-GPU configurations exchange data directly when a workload needs more than one accelerator.

The company's partnership with Supermicro ensures their hardware foundation features high-performance server systems optimized specifically for GPU acceleration workloads. This attention to infrastructure fundamentals creates a robust technical foundation for even the most demanding computational tasks.

Hardware Portfolio and Technical Architecture

Atlantic.net offers two flagship GPU configurations with extensive customization possibilities:

NVIDIA L40S Ada Series

Representing the balance point between computational power and economic efficiency, the L40S accelerator delivers versatile performance suited for a wide spectrum of AI, machine learning, and visualization workloads.

Technical Profile:

- Starting Cost: $1.57 hourly with on-demand pricing

- Contract Discounts: Substantial cost reductions available with 12-month and 36-month commitment options

- Graphics Memory: 48GB GDDR6 featuring ECC protection for computational accuracy

- Memory Throughput: 864 GB/s bandwidth capacity

- Parallel Computing Units: 18,176 CUDA cores for general-purpose GPU computing

- AI Acceleration Cores: 568 Tensor cores optimized for matrix operations

- Real-time Visualization Units: 142 RT cores for graphics rendering acceleration

- Precision Computing: Support across FP8, FP16, FP32, and FP64 calculations

- TensorFloat Optimization: Native TF32 support for enhanced deep learning framework performance

- Expansion Interface: PCIe 4.0 x16 connectivity

- Energy Profile: Optimized efficiency for enterprise workloads

- Host System Options:
  - Processing: Latest Intel and AMD enterprise CPU configurations
  - System Memory: Scalable DDR5 RAM from 32GB to 768GB
  - Storage Architecture: High-performance NVMe or Enterprise SSD configurations
  - Operating Environment: Multiple Linux distributions and Windows platform options

Functional Capabilities:

- AI Development Acceleration: Streamlines the complete workflow from prototype to production deployment

- ML Workload Optimization: Enhanced efficiency for model training and inference operations

- Deep Learning Processing: Superior performance-to-cost ratio for neural network operations

- Visualization Applications: Exceptional capabilities for rendering and video encoding tasks

- Framework Compatibility: Pre-optimized for major AI/ML environments including TensorFlow and PyTorch

NVIDIA H100 NVL Hopper Architecture

Representing the premium tier of GPU computing, the H100 NVL delivers extraordinary performance for large-scale AI training, inference at scale, and advanced scientific computing applications.

Technical Profile:

- Starting Cost: $3.94 hourly with on-demand pricing

- Contract Discounts: Significant savings available with 12-month and 36-month agreements

- Graphics Memory: 94GB HBM3 (High Bandwidth Memory) configuration

- Memory Throughput: Industry-leading 3.9 TB/s bandwidth capacity

- Parallel Computing Units: 14,592 CUDA cores for massive parallel processing

- AI Acceleration Cores: 456 fourth-generation Tensor cores

- Specialized LLM Architecture: Purpose-engineered Transformer Engine for large language model optimization

- Inter-GPU Communication: NVLink technology enabling direct GPU-to-GPU data transfer at 900 GB/s

- Expansion Interface: PCIe 5.0 with 128 GB/s bi-directional throughput

- Energy Efficiency: Superior computational density per watt consumed

- Host System Options:
  - Processing: High-core-count AMD EPYC or Intel Xeon configurations
  - System Memory: Expandable to 1.5TB DDR5 capacity
  - Storage Architecture: Ultra-performance NVMe RAID configurations
  - Operating Environment: Optimized Linux and Windows platform options

Functional Capabilities:

- Language Model Operations: Superior architecture for transformer-based LLM inference and training

- AI Research Computing: Enables development of complex AI models at unprecedented scale

- Scientific Simulation: Accelerates research-oriented computations and analysis

- Big Data Operations: Efficiently processes extremely large datasets with high-bandwidth memory access

- Workload Isolation: Optional Multi-Instance GPU (MIG) support for secure workload partitioning
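To put the two memory sizes in perspective, here is a rough sketch that estimates whether a model's weights alone fit on each card. The 48 GB and 94 GB capacities come from the technical profiles above; the model sizes are hypothetical examples, 1 GB is taken as 10^9 bytes, and the estimate deliberately ignores activations, optimizer state, and inference caches, which all add to real memory use.

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}

# GPU memory capacities in GB, as listed in the technical profiles.
GPU_MEMORY_GB = {"L40S": 48, "H100 NVL": 94}


def weights_gb(num_params: float, precision: str) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits(num_params: float, precision: str, gpu: str) -> bool:
    """True if the weights alone fit in the given GPU's memory."""
    return weights_gb(num_params, precision) <= GPU_MEMORY_GB[gpu]


# Hypothetical model sizes: 7, 13, and 70 billion parameters.
for params in (7e9, 13e9, 70e9):
    for precision in ("fp16", "fp8"):
        need = weights_gb(params, precision)
        verdict = ", ".join(
            f"{gpu}: {'fits' if fits(params, precision, gpu) else 'too large'}"
            for gpu in GPU_MEMORY_GB
        )
        print(f"{params / 1e9:.0f}B params at {precision}: {need:.0f} GB -> {verdict}")
```

Treat a "fits" result as a necessary condition rather than a guarantee, since training in particular needs several times the weight memory.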

Deployment Flexibility and Resource Customization

Atlantic.net provides extensive configuration options for both accelerator types:

- Resource Allocation Models:
  - Fractional GPU Access: Cost-optimized shared GPU resources for smaller workloads
  - Full Accelerator Dedication: Complete GPU access for maximum performance
  - Multi-GPU Configurations: Scaling options up to 8 GPUs per server for massively parallel workloads

- Resource Customization Options:
  - Processing Cores: Configurable vCPU allocation matched to computational requirements
  - System Memory: Scalable RAM capacity optimized for specific workload profiles
  - Storage Architecture:
    - Ultra-responsive NVMe for latency-sensitive applications
    - Enterprise SSD for reliable general-purpose operations
    - Expandable capacity options for data-intensive processing
  - Network Infrastructure: High-throughput, low-latency connectivity options

- Financial Models:
  - Usage-Based Billing: Pay-as-you-go hourly rates (minimum $1.57/hour for L40S, $3.94/hour for H100 NVL)
  - Medium-Term Agreements: Cost advantages with 12-month commitments
  - Extended Contracts: Maximum economy with 36-month agreements
  - Billing Features:
    - Monthly rate ceiling (after 730 hours/month)
    - Transparent usage-based accounting
    - No concealed charges or fees
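The hourly rates and the 730-hour monthly ceiling listed above can be turned into a simple cost estimate. This is a sketch under one assumption: that hours beyond 730 in a month are simply not billed. The function name `monthly_cost` is hypothetical, and contract discounts are not modeled.

```python
# Entry on-demand rates and the monthly billing ceiling quoted above.
HOURLY_RATES_USD = {"L40S": 1.57, "H100 NVL": 3.94}
MONTHLY_CAP_HOURS = 730


def monthly_cost(gpu: str, hours_used: float) -> float:
    """Estimate one month's on-demand cost for a single GPU, in USD."""
    if hours_used < 0:
        raise ValueError("hours_used must not be negative")
    # Assumption: usage beyond the cap is not billed.
    billable_hours = min(hours_used, MONTHLY_CAP_HOURS)
    return round(HOURLY_RATES_USD[gpu] * billable_hours, 2)


for gpu in HOURLY_RATES_USD:
    print(f"{gpu}: 100 h = ${monthly_cost(gpu, 100):,.2f}, "
          f"full month = ${monthly_cost(gpu, 744):,.2f}")
```

Under this reading, a 31-day month (744 hours) costs the same as 730 hours, so an always-on instance has a predictable monthly maximum.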

Platform Capabilities and Service Features

Infrastructure and Performance Engineering

- Direct Hardware Access: Unlike competitors offering virtualized GPU instances, Atlantic.net provides bare-metal GPU servers that eliminate performance-reducing abstraction layers for maximum computational throughput.

- Resource Allocation Flexibility: Choose between economical shared GPU resources or dedicated accelerator instances for peak performance, with intelligent resource distribution via NVIDIA NVLink technology based on specific VRAM requirements.

- Multi-GPU Architecture: Construct high-performance clusters with multiple accelerators for intensive workloads, with optimized inter-GPU communication through dedicated NVLink channels for computational efficiency.

- Advanced Network Architecture: GPU servers connect through high-capacity, low-latency network infrastructure with speeds reaching 100 Gbps, ensuring rapid data movement across all system components.

- Global Infrastructure Footprint: Strategic data center placement across North America, Europe, and Asia Pacific regions minimizes latency for worldwide users and enables geographic redundancy planning.

- Enterprise Storage Architecture: Professional-grade storage solutions with RAID configurations for data security and performance optimization, utilizing NVMe technology for maximum throughput with configurations reaching 7.68 TB capacity.

Administrative Controls and Deployment System

- Rapid Resource Provision: Launch GPU instances within minutes, with preconfigured options available in under 30 seconds, enabling dynamic resource scaling without extended provisioning delays.

- Management Dashboard: User-centric control interface for efficient resource provisioning, monitoring, and management through a comprehensive web console with detailed performance analytics.

- Complete System Control: Unrestricted root or administrator access to server environments, enabling customized software installation, driver optimization, and configuration flexibility for specialized workload requirements.

- Programmatic Controls: API-based management of GPU resources, facilitating automation integration with development pipelines and orchestration frameworks.

- Access Security Framework: Comprehensive authentication mechanisms including SSH key management, multi-factor verification, and role-based privilege controls protecting GPU resources.

Security Architecture and Compliance Framework

- Enterprise Security Implementation: Atlantic.net maintains rigorous security standards with multi-layered threat protection including DDoS mitigation systems, advanced firewall configurations, and intrusion detection mechanisms.

- Regulatory Adherence: Complete HIPAA, PCI-DSS, SOC 2/3, and GDPR certification compliance, making their platform suitable for organizations facing strict regulatory requirements. Independent verification through comprehensive third-party audits validates compliance standards.

- Data Protection Protocols: Comprehensive encryption options for information at rest and in transit, with key management solutions available for sensitive applications in healthcare, financial services, and other regulated industries.

- Physical Access Controls: Enterprise-grade data centers featuring stringent physical security measures, continuous surveillance, and multiple protection layers safeguarding hardware assets.

System Reliability and Technical Support

- Guaranteed Availability: Atlantic.net provides an industry-leading 100% uptime service level agreement, ensuring uninterrupted access to GPU computing resources for mission-critical applications.

- Fault-Tolerant Design: GPU infrastructure housed in modern facilities with redundant power systems, cooling architecture, and network connectivity to maximize operational continuity.

- 24/7 Expert Assistance: US-based technical support team available around the clock to address GPU-related inquiries and technical challenges, with an established record of prompt response times.

- Enterprise Customer Care: Dedicated account management for business clients, delivering personalized support and strategic guidance for GPU workload optimization and infrastructure planning.

AI and ML Development Ecosystem

- Ready-to-Use Software Environment: GPU servers deployable with pre-configured AI and ML development stacks, including popular frameworks and libraries for immediate productivity.

- Parallel Computing Framework: Comprehensive support for NVIDIA's CUDA platform enabling GPU-accelerated application development that leverages massively parallel processing capabilities.

- Neural Network Optimization: Support for critical GPU-accelerated libraries such as cuDNN for neural network development and inference, optimized for maximum performance on Atlantic.net's hardware.

- Containerization Support: Seamless integration with Docker and NVIDIA Container Toolkit, simplifying deployment of GPU-accelerated applications and services across development environments.

- Model Repository Access: Direct connectivity to NVIDIA's NGC catalog of pre-trained models, development kits, and optimized frameworks accelerating the development cycle from concept to deployment.

- Custom Environment Persistence: Capability to create and maintain custom machine images with pre-configured development environments, enabling consistent development experience across teams and projects.

Industry Applications and Implementation Scenarios

Atlantic.net's GPU infrastructure provides exceptional utility across numerous sectors and technical implementations. The following sections detail specific use cases demonstrating how various industries leverage their GPU computing platform:

Artificial Intelligence and Machine Learning Operations

- Advanced Language Model Development:
  - Deploy and customize foundation models including transformer-based architectures
  - Host production-grade inference endpoints for conversational systems at scale
  - Enhance inference performance through specialized acceleration techniques
  - Practical implementation: AI companies can fine-tune foundation models on the H100 NVL with its expansive 94GB memory, accommodating larger context windows and parameter counts than standard offerings

- Visual Intelligence Systems:
  - Develop industrial inspection systems using object detection and segmentation models
  - Create advanced biometric systems with enhanced security features
  - Build diagnostic tools for medical image analysis with clinical-grade accuracy
  - Implement smart infrastructure monitoring through real-time video analysis
  - Technical advantage: The L40S GPU's Tensor cores accelerate the parallel processing of complex visual data

- Autonomous System Development:
  - Engineer self-directing systems for industrial automation and robotics
  - Develop advanced simulation environments for agent training
  - Create optimized control systems for manufacturing and logistics operations
  - Implementation example: Automation developers can conduct thousands of simultaneous simulations on dedicated GPU infrastructure, dramatically accelerating training cycles

Healthcare Technology and Life Sciences Research

- Diagnostic Imaging Enhancement:
  - Optimize analysis workflows for diagnostic imaging (MRI, CT, X-ray)
  - Create early detection systems for disease identification
  - Generate detailed three-dimensional reconstructions from medical imaging for procedural planning
  - Operational improvement: Medical facilities can reduce image analysis time from hours to minutes using GPU-accelerated processing

- Genetic Analysis and Pharmaceutical Research:
  - Analyze comprehensive genomic information to identify disease markers
  - Speed up molecular simulation and protein structure prediction
  - Facilitate computational screening of potential therapeutic compounds
  - Performance enhancement: H100 NVL's exceptional 3.9 TB/s memory bandwidth enables scientists to process complete genomic data without traditional system bottlenecks

- Individualized Treatment Development:
  - Create predictive models for patient-specific treatment outcomes
  - Analyze comprehensive healthcare records to identify treatment patterns
  - Develop simulation models for personalized treatment response prediction
  - Regulatory advantage: Atlantic.net's HIPAA-compliant infrastructure enables secure processing of protected patient information

Financial Technology and Investment Services

- Investment Risk Assessment:
  - Execute sophisticated Monte Carlo simulations for portfolio risk evaluation
  - Create and validate algorithmic trading strategies
  - Develop comprehensive credit risk assessment and fraud prevention systems
  - Technical implementation: Financial analysts can utilize GPU parallelism to evaluate thousands of market scenarios concurrently

- Transaction Security Systems:
  - Analyze payment data streams to identify potentially fraudulent activities
  - Implement graph-based neural networks to detect unusual patterns in financial transactions
  - Develop anomaly detection systems for regulatory compliance
  - Performance advantage: The L40S GPU provides optimal balance between computational capability and operational cost for continuous monitoring systems

- Advanced Financial Modeling:
  - Optimize derivative pricing calculations
  - Enhance high-frequency trading algorithm performance
  - Develop advanced market prediction systems using deep learning techniques
  - Competitive advantage: Financial institutions gain crucial microsecond performance improvements through GPU acceleration
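For readers unfamiliar with the Monte Carlo risk evaluation mentioned above, here is a minimal CPU-only sketch of the idea: simulate many independent scenarios and read a loss threshold off the sorted results. Every scenario is independent, which is why the workload parallelizes well on a GPU. The normal-return model, the parameter values, and the function name `simulate_var` are illustrative assumptions, not a production risk model.

```python
import random


def simulate_var(mean: float, stdev: float, scenarios: int,
                 confidence: float = 0.95, seed: int = 0) -> float:
    """Estimate one-day value at risk as a fraction of portfolio value.

    Draws `scenarios` daily returns from a normal distribution and returns
    the loss that is exceeded in roughly (1 - confidence) of them.
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    returns = sorted(rng.gauss(mean, stdev) for _ in range(scenarios))
    cutoff = int((1 - confidence) * scenarios)
    return -returns[cutoff]


# Hypothetical portfolio: 0.05% mean daily return, 2% daily volatility.
var_95 = simulate_var(mean=0.0005, stdev=0.02, scenarios=10_000)
print(f"Estimated 95% one-day VaR: {var_95:.2%} of portfolio value")
```

A GPU version would draw and sort all scenarios as one large array operation instead of a Python loop, which is where the concurrency described above comes from.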

Scientific Computing and Engineering Applications

- Environmental Modeling:
  - Execute detailed climate simulations to evaluate environmental change impacts
  - Create enhanced meteorological prediction models
  - Process satellite and sensor information for environmental monitoring
  - Economic benefit: Atlantic.net's on-demand pricing enables researchers to conduct computationally intensive simulations without permanent hardware investments

- Fluid Dynamics Simulation:
  - Model airflow characteristics for aerospace and automotive design
  - Simulate fluid behavior for industrial process optimization
  - Enhance HVAC system efficiency through detailed simulation
  - Performance metric: Engineering teams achieve 10-20x acceleration compared to traditional CPU-only simulations

- Molecular Simulation Systems:
  - Model protein interactions and pharmaceutical compound behavior
  - Investigate material properties at atomic scales
  - Develop advanced catalytic materials for industrial applications
  - Technical advantage: H100 NVL's massive parallel architecture enables simultaneous simulation of millions of atomic interactions

Digital Media and Entertainment Production

- Advanced Visualization and Rendering:
  - Accelerate rendering processes for film and television production
  - Enable real-time visualization for architectural and product design
  - Minimize rendering times for animation studios
  - Productivity enhancement: Media production teams can render complex visual scenes in minutes rather than hours using GPU acceleration

- Video Processing Systems:
  - Convert video content for streaming platform distribution
  - Apply AI-enhanced upscaling to improve visual quality
  - Implement video analytics for content moderation and metadata generation
  - Hardware advantage: The L40S GPU features dedicated encoding/decoding hardware for optimized video processing

- Immersive Experience Development:
  - Create realistic virtual reality environments with advanced physics simulation
  - Develop augmented reality applications with real-time object recognition
  - Enable remote rendering services for lightweight VR/AR devices
  - Infrastructure benefit: High-bandwidth network connectivity ensures smooth delivery of rendered content

Enterprise Analytics and Business Intelligence

- Enterprise Data Processing:
  - Optimize data transformation processes for analytics systems
  - Enable continuous analytics on streaming data sources
  - Visualize and analyze comprehensive datasets for business insights
  - Performance improvement: GPU-accelerated data processing delivers 5-10x efficiency compared to conventional CPU-based solutions

- Business Forecasting Systems:
  - Develop revenue prediction models with enhanced accuracy
  - Create customer retention analysis systems
  - Optimize operational efficiency through predictive modeling
  - Implementation example: Retail enterprises can analyze years of transaction information in minutes to identify market opportunities

- Business Communication Analysis:
  - Evaluate customer feedback and social sentiment
  - Extract actionable insights from unstructured text information
  - Implement automated document processing systems
  - Technical advantage: Atlantic.net's GPU architecture enables parallel processing of millions of text documents simultaneously

Performance Analysis and Market Assessment

Performance Benchmarking

Atlantic.net's GPU offerings demonstrate exceptional performance characteristics that position them competitively in the cloud accelerator market:

L40S Performance Metrics:

- AI inference tasks demonstrate approximately 1.3x performance improvement over previous generation accelerators

- FP8 precision calculations show 2-5x throughput enhancement for transformer-based models

- Mixed precision training demonstrates 30-40% efficiency improvement compared to consumer-grade accelerators

- Media processing capabilities support resolutions up to 8K at 60 frames per second

H100 NVL Performance Highlights:

- Demonstrates up to 12x performance improvement for GPT-3 175B models compared to previous technology generations

- Transformer Engine with FP8 precision reduces memory requirements by approximately 60%

- HBM3 memory architecture with 3.9 TB/s bandwidth eliminates data transfer bottlenecks for large datasets

- Multi-GPU configurations show near-linear scaling efficiency for distributed training workloads
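To put the memory figures above in context, here is a rough, weights-only estimate of how much GPU memory a 175B-parameter model needs at different precisions. It is a back-of-the-envelope sketch, not a vendor benchmark: the bytes-per-parameter values are standard, the 94 GB capacity is the H100 NVL figure quoted elsewhere in this article, and the function names are made up for illustration. Activations, optimizer state, and caches are ignored, so the result will not reproduce the "approximately 60%" saving quoted above.

```python
import math

# Bytes needed to store a single parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_memory_gb(num_params, precision):
    """Memory needed for the model weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def min_gpus_for_weights(num_params, precision, gpu_memory_gb):
    """Smallest number of GPUs whose combined memory can hold the weights."""
    return math.ceil(weight_memory_gb(num_params, precision) / gpu_memory_gb)


if __name__ == "__main__":
    params = 175e9   # GPT-3 175B, the model size cited above
    gpu_mem_gb = 94  # per-card capacity of an H100 NVL
    for precision in ("fp32", "fp16", "fp8"):
        size = weight_memory_gb(params, precision)
        gpus = min_gpus_for_weights(params, precision, gpu_mem_gb)
        print(f"{precision}: {size:.0f} GB of weights, at least {gpus} GPU(s)")
```

Halving the bytes per parameter halves the weight footprint, which is why lower-precision formats matter so much for fitting large models onto fewer cards.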

Competitive Market Analysis

Within the GPU cloud service landscape, Atlantic.net offers several significant advantages:

- Economic Positioning: While not offering the absolute lowest rates in the market, Atlantic.net delivers superior value compared to major cloud platforms (AWS, Azure, GCP) with entry pricing of $1.57/hour for L40S and $3.94/hour for H100 NVL, representing substantial cost efficiency versus equivalent offerings from larger providers.

- Technical Differentiation: Atlantic.net provides access to current-generation NVIDIA accelerators in both dedicated and shared configurations, with more direct hardware access than many competitors primarily offering virtualized environments.

- Regulatory Advantage: The combination of high-performance GPU infrastructure with comprehensive regulatory certifications (HIPAA, PCI, SOC 2/3, GDPR) distinguishes Atlantic.net for organizations in regulated sectors requiring both computational performance and security compliance.

- Customer Service Superiority: Continuous 24/7/365 US-based technical assistance represents a significant advantage over many cloud GPU providers offering limited or tiered support models, particularly valuable for mission-critical AI/ML operations.

- Specialized Focus: As a cloud provider emphasizing GPU computing rather than offering GPU services as one component among hundreds, Atlantic.net delivers more focused solutions and expertise specifically for GPU-accelerated applications.

Strategic Assessment and Client Recommendations

Atlantic.net's GPU cloud services provide a versatile and powerful computing platform for organizations requiring advanced processing capabilities. With competitive pricing beginning at $1.57/hour for L40S and $3.94/hour for H100 NVL, along with substantial discounts for extended commitments, they present exceptional value across organization sizes, particularly when compared with major cloud providers charging premium rates for similar GPU resources.

The integration of cutting-edge NVIDIA accelerators (L40S and H100 NVL), extensively customizable system configurations, enterprise security features with comprehensive compliance certifications, and constant expert support positions Atlantic.net as an excellent choice for demanding AI/ML implementations, data analytics, and other GPU-accelerated applications. Their guaranteed 100% operational uptime further enhances their appeal for organizations requiring continuous computational resource availability.

Optimal Client Profiles:

- AI ventures and research organizations requiring high-performance GPU resources without significant capital expenditure

- Medical organizations conducting imaging analysis under strict regulatory requirements

- Financial service providers implementing complex risk models and security monitoring systems

- Academic and research institutions conducting computational simulations and data analysis

- Content creation companies requiring rendering and media processing capabilities

- Enterprise organizations developing and implementing machine learning at scale

Implementation Considerations:

- Organizations with large-scale GPU requirements (hundreds of accelerators) should engage directly with Atlantic.net for custom infrastructure planning

- While Atlantic.net maintains global data center presence, organizations with specific geographic requirements should verify availability in target regions

- Clients should evaluate the financial benefits of on-demand versus reserved instances based on projected usage patterns
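The on-demand versus reserved decision comes down to simple arithmetic. The sketch below uses the two hourly rates quoted above; the 25% reserved discount is a placeholder, since the article does not state the actual discount, and the 730-hour month and the function names are likewise assumptions for illustration.

```python
HOURS_PER_MONTH = 730  # assumption: average month (8,760 hours / 12)

# On-demand hourly rates quoted in this article.
ON_DEMAND_USD_PER_HOUR = {"L40S": 1.57, "H100 NVL": 3.94}


def on_demand_monthly_cost(hourly_rate, hours_used):
    """On-demand: pay only for the hours actually used."""
    return hourly_rate * hours_used


def reserved_monthly_cost(hourly_rate, discount):
    """Reserved: pay a discounted rate for every hour in the month."""
    return hourly_rate * (1 - discount) * HOURS_PER_MONTH


def break_even_hours(discount):
    """Monthly usage above which the reserved instance is the cheaper option."""
    return HOURS_PER_MONTH * (1 - discount)


if __name__ == "__main__":
    discount = 0.25  # hypothetical reserved discount, not a published figure
    print(f"Break-even usage: {break_even_hours(discount):.1f} hours/month")
    for gpu, rate in ON_DEMAND_USD_PER_HOUR.items():
        on_demand = on_demand_monthly_cost(rate, hours_used=200)
        reserved = reserved_monthly_cost(rate, discount)
        print(f"{gpu}: ${on_demand:,.2f} on demand at 200 h "
              f"vs ${reserved:,.2f} reserved")
```

Note that the break-even point depends only on the discount, not on the hourly rate: with a 25% discount, a GPU would need to be busy roughly three quarters of the month before a reservation pays off.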

Overall, Atlantic.net's GPU cloud services deliver an impressive combination of computational performance, implementation flexibility, security compliance, and economic value that effectively addresses modern AI and high-performance computing requirements across diverse industries and application scenarios.

🔗 Click here to check out Atlantic.net Cloud GPU Hosting Solutions

Recommendation: For high-performance applications that demand reliable GPU acceleration and a managed hosting environment, Liquid Web Cloud GPU Hosting is a top-tier choice. Its blend of powerful hardware, exceptional support, and enterprise reliability makes it a service that can truly accelerate your compute workloads.

Links

🔗 Click here to try Liquid Web's cloud GPU hosting services risk free

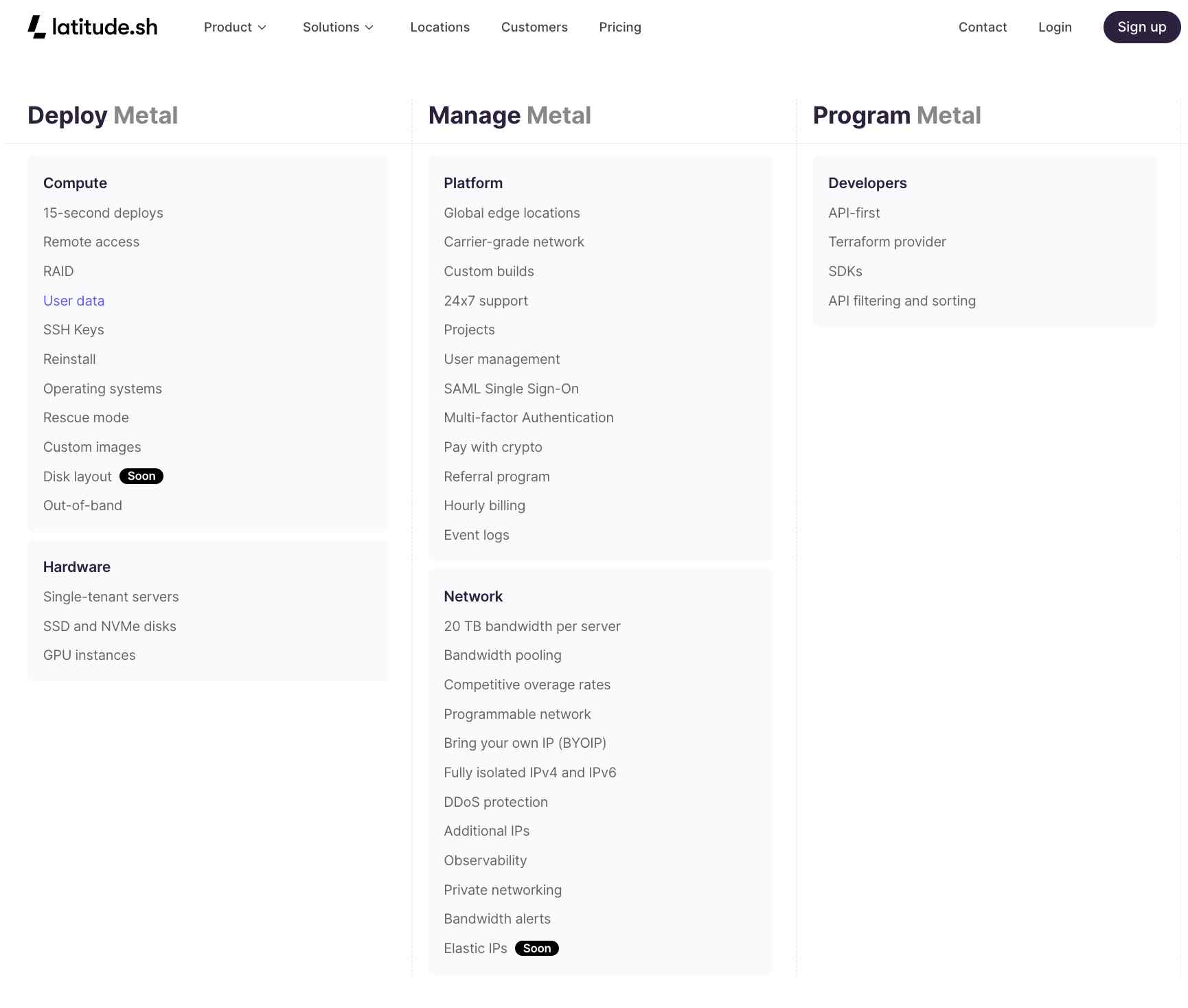

Latitude.sh

Deploy and manage high performance bare metal servers in seconds with the cloud native tools you already use.

Latitude.sh is a comprehensive cloud infrastructure service provider, catering to businesses looking for scalable, high-performance cloud solutions. Their services are diverse, ranging from dedicated bare metal servers to advanced cloud acceleration, custom builds, efficient storage solutions, and a robust network infrastructure. This versatility makes Latitude.sh a go-to option for companies aiming to enhance their cloud capabilities.

Services

Latitude.sh's features are designed to meet a wide array of business needs:

Bare Metal Servers:

These servers offer rapid deployment, remote access, RAID configurations, and a variety of operating systems. They provide the raw performance of physical servers combined with the flexibility of virtual environments. This feature is particularly beneficial for businesses requiring high computational power without the overhead of virtualization.

Cloud Acceleration (Accelerate):

Latitude.sh offers GPU instances designed for tasks requiring significant computational resources, such as AI and machine learning. These instances can handle demanding workloads, making them ideal for data scientists and researchers.

Custom Builds (Build):

This service allows businesses to tailor their infrastructure according to specific needs. From selecting RAM capacity to entire rack configurations, Latitude.sh provides a level of customization that can support unique business requirements, whether it's for a startup or a large enterprise.

Storage Solutions:

Latitude.sh's storage solutions are built on NVMe drives, ensuring high performance. They offer features like fault tolerance and no egress fees, making them suitable for latency-sensitive applications. This is particularly advantageous for businesses dealing with large volumes of data that require quick access and reliable storage.

Network Infrastructure:

The carrier-grade network infrastructure includes features like 20 TB bandwidth per server, DDoS protection, and private networking capabilities. This robust network setup is essential for businesses that require a reliable and secure way to handle large-scale internet traffic.

Products

- Metal: These are single-tenant servers equipped with SSD and NVMe disks, offering a blend of performance and security for various applications.

- Accelerate: These GPU instances are tailored for intensive tasks like machine learning, providing the necessary computational power for complex algorithms.

- Build: This product allows for the deployment of fully automated bare metal servers, customized to the client's specifications.

- Storage: High-performance storage options are available, catering to the needs of data-intensive applications.

Plans

Deploy Metal

Compute

- 15-second deploys

- Remote access

- RAID

- User data

- SSH Keys

- Reinstall

- Operating systems

- Rescue mode

- Custom images

- Disk layout (Coming Soon)

- Out-of-band

Hardware

- Single-tenant servers

- SSD and NVMe disks

- GPU instances

Manage Metal

Platform

- Global edge locations

- Carrier-grade network

- Custom builds

- 24x7 support

- Projects

- User management

- SAML Single Sign-On

- Multi-factor Authentication

- Pay with crypto

- Referral program

- Hourly billing

- Event logs

Network

- 20 TB bandwidth per server

- Bandwidth pooling

- Competitive overage rates

- Programmable network

- Bring your own IP (BYOIP)

- Fully isolated IPv4 and IPv6

- DDoS protection

- Additional IPs

- Observability

- Private networking

- Bandwidth alerts

- Elastic IPs (Coming Soon)

Pricing

Specific pricing details are not listed on their website. Latitude.sh does offer hourly billing, which points to a flexible pay-as-you-go approach. This structure can be particularly appealing for businesses looking for cost-effective solutions without long-term commitments.

Pros

- Comprehensive range of customizable cloud solutions.

- High-performance storage and network capabilities, ideal for data-intensive tasks.

- No egress fees for storage, offering cost savings.

- Round-the-clock support and user-friendly management interfaces.

Cons

- Exact pricing not listed on the website.

Solutions

AI Acceleration

Latitude.sh's Accelerate solution offers dedicated instances with NVIDIA's H100 GPUs, ideal for deploying high-performance AI infrastructure. This service is tailored for companies looking to deploy AI applications rapidly and efficiently. Key features include:

- NVIDIA H100 GPUs: These powerful GPUs can train models up to 9x faster than previous-generation GPUs.

- Pre-configured Deep Learning Tools: Tools like TensorFlow, PyTorch, and Jupyter are pre-installed, simplifying the setup process.

- Global Edge Locations: Deploy GPU instances in over 18 locations worldwide to minimize latency.

- API and Integration Ready: A robust API and integrations like Terraform are available for streamlined operations.

- Intuitive Dashboard: Manage GPU instances easily with a user-friendly dashboard.

Web3 Infrastructure

Latitude.sh provides a globally distributed node infrastructure optimized for Web3 and DeFi projects. This solution is designed for blockchain platforms and businesses running Web3 applications. Features include:

- Updated Servers for Blockchain: Servers are optimized for running validator nodes or RPC servers.

- Scalability: Quickly scale to hundreds of nodes in various global regions.

- Blockchain-Optimized Instances: Tailored for predictable bandwidth costs and performance.

- Decentralization Support: Helps in decentralizing Web3 with several global locations, including South America.

Online Gaming

For online gaming, Latitude.sh offers low-latency, high-performance bare metal servers. This solution is ideal for game developers and hosting services. Key aspects include:

- Custom Infrastructure: Server specifications are tailored to the needs of different games.

- Improved Performance: Individual containers deliver up to 30% greater compute and I/O performance.

- Custom Connectivity: Solutions for low latency, especially in regions like Brazil.

- DDoS Protection: Advanced technology to ensure uninterrupted gaming experiences.

Use Cases

DDoS Protection

Latitude.sh's DDoS protection is designed to secure dedicated servers from various types of network attacks. This service is crucial for businesses looking to safeguard their online presence. Features include:

- Comprehensive Mitigation: Capacity to handle attacks of any size and form, including TCP, UDP, and ICMP floods.

- Managed Defense Mechanisms: Full protection of layers 3, 4, and 7, with features like IP blocking and ACLs.

- No Extra Cost: Included with all Latitude.sh servers, providing constant protection.

Containers

Latitude.sh's container solution emphasizes the advantages of running containers on bare metal. This use case is suitable for businesses seeking efficient container deployment. Highlights include:

- No VMs Necessary: Reduces the noisy neighbor effect and overhead.

- Increased Performance: Up to 30% more compute and I/O performance compared to VM-based environments.

- Resource Utilization: Significantly higher resource utilization, reducing operational costs.

Streaming

The streaming solution from Latitude.sh is tailored for on-demand and live media streaming, requiring high performance and transit capacity. This use case is ideal for media companies and streaming services. Key features include:

- High-Quality Network: Works with local Tier I transit providers for low-jitter, high-throughput delivery.

- Origin and Edge Services: Fast content delivery with secure servers and direct connect options to public clouds and CDNs.

Features


Platform

Leverage the power and flexibility of a true bare metal cloud platform. Manage and access real-time information about your bare metal fleet through our API and dashboard.

Global edge locations

We oversee every aspect of our points of presence, ensuring you have a single partner for your global presence.

Carrier-grade network

We build and manage our network in all locations, giving us more control over its functionality.

Custom builds

Deploy one or a thousand fully automated bare metal servers specific to your needs.

24x7 support

We’re here to help with questions and implementation tips. Contact our support specialists any time of day.

Projects

Organize your resources into groups that make sense for you. Create projects to separate different workloads and environments.

User management

Add, edit, set permissions, and remove users in one click.

SAML Single Sign-On

Log in to Latitude.sh with your IAM. Our SAML integration supports the provisioning and de-provisioning of users.

Multi-factor Authentication

MFA is available as an additional security step for Email and OAuth-based logins.

Pay with crypto

Pay for your Latitude.sh usage with cryptocurrency.

Referral program

Share a unique referral link and receive rewards when referring a new user to Latitude.sh.

Hourly billing

With hourly billing you only pay for the resources you use for the period they were used.

Event logs

With Events you can easily audit everything that happens in your account, from new members being added to changes to your infrastructure resources.

Compute

Everything you love from the cloud, delivered on bare metal. Fully isolated, single-tenant dedicated servers, with no agents and no overhead, powered by automation you would only find in virtual environments.

15-second deploys

Deploy servers with the most popular Operating Systems in 15 seconds. All OSs that can't be deployed instantly are deployed in just 10 minutes.

Remote access

Securely connect to your server's IPMI for out-of-band management.

RAID

Deploy servers with RAID 0 or RAID 1 for improved data resilience.

User data

Run arbitrary commands on your server when it first boots. Use variables to pull device information dynamically with zero effort.

SSH Keys

Add any number of SSH keys and deploy servers that are secure by default.

Reinstall

Securely wipe all of your data and provision the same server with a fresh copy of the operating system of your choice.

Operating systems

Deploy any major operating system with one click, including Windows Server, Ubuntu, Debian, Flatcar, Rocky Linux, and others.

Rescue mode

Easily make changes and recover data if you lose SSH access to your server.

Custom images

Use iPXE scripts to quickly deploy the custom image of your choice.

Disk layout

You'll soon be able to select the disk layout that best serves your needs, including OS, swap, data, and custom partitions.

Out-of-band

Access your server's serial console over SSH if the server itself becomes unreachable through its normal network connection. Out-of-band is the easiest method to start a recovery process for your instance.

Hardware

Enterprise-grade hardware to run the most demanding workloads.

Single-tenant servers

Deploy single-tenant servers for more performance, control, and no risk of noisy neighbors.

SSD and NVMe disks

Choose from enterprise-class SSDs and NVMe flash drives.

GPU instances

Latitude.sh Accelerate provides powerful GPU instances to run the most demanding training, fine-tuning, and inference use cases.

Network

Reach millions of users around the globe with Latitude.sh’s global, carrier-grade network. Quickly create private networks, assign elastic IPs, and manage network resources from an easy-to-use dashboard and a powerful API.

20 TB bandwidth per server

You get 20 TB of free egress traffic per server every month automatically added to your monthly bandwidth quota.

Bandwidth pooling

Servers in the same region have their bandwidth quota pooled. This means you won’t worry about individual servers, and you’ll have a single place to manage everything related to your traffic.

Competitive overage rates

Going over your quota costs just $0.01 per GB. Overage is only charged when you exceed your quota after your bandwidth is pooled.
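The pooling and overage rules above can be expressed as a short calculation. This is a sketch of the billing logic as described in this section, not Latitude.sh's actual billing code: the function name is hypothetical, and it assumes 1 TB = 1,000 GB, which the article does not specify.

```python
QUOTA_TB_PER_SERVER = 20   # free egress per server per month, from the text above
OVERAGE_USD_PER_GB = 0.01  # overage rate quoted above
GB_PER_TB = 1000           # assumption: decimal terabytes


def pooled_overage_cost(usage_tb_by_server):
    """Overage charge for all servers in ONE region, after pooling quotas.

    usage_tb_by_server: monthly egress in TB for each server in the region.
    """
    pooled_quota_gb = len(usage_tb_by_server) * QUOTA_TB_PER_SERVER * GB_PER_TB
    total_usage_gb = sum(usage_tb_by_server) * GB_PER_TB
    overage_gb = max(0, total_usage_gb - pooled_quota_gb)
    return overage_gb * OVERAGE_USD_PER_GB


if __name__ == "__main__":
    # One busy server is covered by the unused quota of its two neighbors.
    print(pooled_overage_cost([35, 10, 5]))
    # Total usage of 70 TB against a pooled 60 TB quota leaves 10 TB of overage.
    print(pooled_overage_cost([45, 15, 10]))
```

The first case shows why pooling matters: a single server well above 20 TB incurs no charge as long as the region as a whole stays within its combined quota.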

Programmable network

Use our API to create and manage your network resources programmatically.

Bring your own IP (BYOIP)

Use your own IPv4 and IPv6 prefixes on Latitude.sh servers to comply with your security and management policies.

Fully isolated IPv4 and IPv6

All servers come with a set of managed IPv4 and IPv6 addresses. These addresses are fully isolated from other customers.

DDoS protection

Unmetered, high-availability DDoS mitigation is available from our global scrubbing centers, equipped to handle any distributed attack.

Additional IPs

Add additional IPs to your projects and use them on any server in the same region.

Observability

Understand your individual and aggregated bandwidth usage with one click. Quickly understand your Latitude.sh environment.

Private networking

Quickly and easily create private networks to connect servers in the same region securely. Traffic from private networks is always free.

Bandwidth alerts

Get email notifications when your bandwidth consumption goes over 80% of your quota.

Elastic IPs

Create, assign and remap additional IPv4 and IPv6 addresses to any of your bare metal servers in seconds.

Developers

We prioritize the developer experience. Integrate faster and make changes to your environments with APIs that are powerful and easy to use.

API-first

Manage infrastructure resources programmatically with our fully documented RESTful API.

Terraform provider

Deploy and version bare metal servers and other infrastructure resources with Latitude.sh's Terraform Provider.

SDKs

Use our robust, documented SDKs to integrate with the Latitude.sh API.

API filtering and sorting

Filter API results with criteria including case sensitivity, prefixes, suffixes, and content. Sorting is also available for almost all attributes.

Click the button below & try Latitude.sh Risk Free


Liquid Web

Liquid Web Introduces GPU Hosting Services for High-Performance Computing

Liquid Web, a leading provider of managed hosting and cloud solutions, has launched GPU hosting services to address the growing needs of high-performance computing (HPC) applications. These services are designed for demanding tasks like artificial intelligence (AI), machine learning (ML), and rendering workloads, providing businesses with the computational power to manage data-intensive operations efficiently.

Overview of Liquid Web's GPU Hosting Services

Liquid Web's GPU hosting solutions are engineered for exceptional performance in resource-intensive applications. By incorporating NVIDIA's advanced GPUs, including models such as the L4 Ada 24GB, L40S Ada 48GB, and H100 NVL 94GB, these services cater to a broad spectrum of computational needs. Each server configuration is optimized to ensure smooth operation for AI/ML tasks, large-scale data processing, and complex rendering projects.

Key Features

- High-Performance Hardware: Servers are equipped with cutting-edge NVIDIA GPUs and AMD EPYC CPUs. For example:

  - NVIDIA L4 Ada 24GB: Dual AMD EPYC 9124 CPUs (32 cores, 64 threads at 3.0 GHz, Turbo 3.7 GHz), 128 GB DDR5 memory, 1.92 TB NVMe RAID-1 storage.

- Optimized Software Stack: Includes the latest NVIDIA drivers, CUDA Toolkit, cuDNN for deep learning, and Docker with NVIDIA Container Toolkit, simplifying AI/ML workload deployment.

- Scalability: Flexible server configurations allow businesses to scale resources as their computational needs grow.

- Compliance and Security: Services adhere to strict compliance standards such as PCI, SOC, and HIPAA audits, ensuring sensitive data security.
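Once a server with a pre-installed driver stack is delivered, a quick sanity check is to ask the driver which GPUs it can see. The sketch below shells out to `nvidia-smi`, which ships with the NVIDIA driver, and parses its CSV output. The helper names are illustrative, and the script simply returns an empty list on machines without an NVIDIA driver.

```python
import shutil
import subprocess

# nvidia-smi query that prints one CSV line per GPU, without a header row.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total,driver_version",
    "--format=csv,noheader",
]


def parse_gpu_lines(output):
    """Turn nvidia-smi CSV output into a list of dicts, one per GPU."""
    gpus = []
    for line in output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, memory, driver = [field.strip() for field in line.split(",")]
        gpus.append({"name": name, "memory": memory, "driver": driver})
    return gpus


def list_gpus():
    """Return the GPUs visible to the driver, or [] if none can be queried."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            QUERY, capture_output=True, text=True, timeout=10, check=False
        )
    except (OSError, subprocess.TimeoutExpired):
        return []
    if result.returncode != 0:
        return []
    return parse_gpu_lines(result.stdout)


if __name__ == "__main__":
    found = list_gpus()
    if not found:
        print("No NVIDIA GPU detected (or nvidia-smi is not installed).")
    for gpu in found:
        print(f"{gpu['name']}: {gpu['memory']}, driver {gpu['driver']}")
```

This only confirms that the driver is working; checking CUDA, cuDNN, or the container toolkit would need separate steps.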

Pricing

Liquid Web offers several GPU server configurations with corresponding pricing:

| GPU Model | Price per Month | CPU Configuration | Memory | Storage |
|---|---|---|---|---|
| NVIDIA L4 Ada 24GB | $880 | Dual AMD EPYC 9124 CPUs | 128 GB DDR5 | 1.92 TB NVMe RAID-1 |
| NVIDIA L40S Ada 48GB | $1,580 | Dual AMD EPYC 9124 CPUs | 256 GB DDR5 | 3.84 TB NVMe RAID-1 |
| NVIDIA H100 NVL 94GB | $3,780 | Dual AMD EPYC 9254 CPUs | 256 GB DDR5 | 3.84 TB NVMe RAID-1 |
| Dual NVIDIA H100 NVL 94GB | $6,460 | Dual AMD EPYC 9254 CPUs | 768 GB DDR5 | 7.68 TB NVMe RAID-1 |

Note: Due to high demand, delivery times for GPU servers range from 24 hours to two weeks.
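Monthly prices are easier to compare with hourly cloud rates once they are normalized. The sketch below converts the monthly prices in the table to an effective hourly rate and to a price per GB of GPU memory. The 730-hour month is an assumption, the GPU memory sizes are taken from the model names in the table, and the figures describe whole servers (CPU, RAM, and storage included), so they are only a rough guide.

```python
HOURS_PER_MONTH = 730  # assumption: average month (8,760 hours / 12)

# (monthly price in USD, total GPU memory in GB) from the pricing table.
CONFIGS = {
    "NVIDIA L4 Ada 24GB": (880, 24),
    "NVIDIA L40S Ada 48GB": (1580, 48),
    "NVIDIA H100 NVL 94GB": (3780, 94),
    "Dual NVIDIA H100 NVL 94GB": (6460, 2 * 94),
}


def effective_hourly_rate(monthly_price):
    """Monthly price spread over every hour of an average month."""
    return monthly_price / HOURS_PER_MONTH


def price_per_gpu_gb(monthly_price, gpu_memory_gb):
    """Monthly price per GB of GPU memory."""
    return monthly_price / gpu_memory_gb


if __name__ == "__main__":
    for name, (price, memory_gb) in CONFIGS.items():
        hourly = effective_hourly_rate(price)
        per_gb = price_per_gpu_gb(price, memory_gb)
        print(f"{name}: ${hourly:.2f}/hour, ${per_gb:.2f} per GB of GPU memory")
```

The effective hourly rate assumes the server runs around the clock; a machine that sits idle half the month effectively costs twice as much per useful hour.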

Pros and Cons

Pros:

- High Performance: Advanced NVIDIA GPUs ensure exceptional processing speeds for AI/ML and rendering tasks.

- Comprehensive Software Stack: Pre-configured with essential tools and frameworks for AI/ML workloads.

- Scalability: Flexible configurations allow businesses to adjust resources as needed.

- Compliance: Adheres to industry standards for data security and regulatory compliance.

Cons:

- Cost: Premium hardware and services may be expensive for smaller businesses.

- Availability: High demand can result in longer delivery times.

Use Cases

Liquid Web GPU Hosting Use Cases:

- AI and Machine Learning: Accelerating model training and inference, hosting pre-trained large language models, and real-time AI services.

- Data Analytics: Speeding up big data processing and real-time analytics with GPU-optimized frameworks.

- Content Creation: Managing large-scale rendering and video editing tasks efficiently.

- Healthcare and Medical Imaging: Enhancing diagnostics, image analysis, and high-computation simulations.

- High-Performance Computing: Supporting scientific research, climate modeling, genomics, and complex engineering simulations.

Conclusion

Liquid Web's GPU hosting services deliver robust solutions for businesses requiring high-performance computing capabilities. With advanced hardware, a comprehensive software stack, and adherence to compliance standards, these services cater to a variety of data-intensive applications.

While the costs may pose a challenge for some, the unparalleled performance and scalability make Liquid Web's GPU hosting a compelling option for organizations leveraging GPU-accelerated computing.

OVHcloud

OVHcloud's cloud servers are built to handle massively parallel workloads. Its GPU instances integrate NVIDIA Tesla V100 graphics processors to meet deep learning and machine learning requirements.

They also help accelerate graphics computing workloads. Together with NVIDIA, OVHcloud offers a GPU-accelerated platform for high-performance computing, AI, and deep learning.

A full catalog of GPU-accelerated containers makes installation and maintenance straightforward. There is no virtualization layer in the way, so each instance gets the full power of its cards, with up to four cards available.

OVHcloud's services and facilities are certified to ISO/IEC 27017, 27701, 27001, and 27018. These certifications show that OVHcloud operates an information security management system (ISMS) to manage risks and vulnerabilities, along with a privacy information management system (PIMS).

The NVIDIA Tesla V100 itself offers a 32 GB/s PCIe interface, 16 GB of HBM2 memory, 900 GB/s of memory bandwidth, 7 teraFLOPS of double-precision performance, 14 teraFLOPS of single-precision performance, and 112 teraFLOPS for deep learning.
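One way to read these V100 numbers is to ask how much arithmetic a workload must do per byte of memory traffic before the GPU's compute units, rather than its memory, become the limit. The sketch below computes that ratio from the figures quoted above. It is a simplified roofline-style estimate built on peak numbers only, and the function and dictionary names are illustrative.

```python
# Peak figures for the Tesla V100 as quoted in this section.
V100 = {
    "double_precision_tflops": 7,
    "single_precision_tflops": 14,
    "deep_learning_tflops": 112,
    "memory_bandwidth_gb_s": 900,
    "memory_gb": 16,
}


def flops_per_byte_to_saturate(peak_tflops, bandwidth_gb_s):
    """Arithmetic intensity (FLOPs per byte) at which compute becomes the limit."""
    return (peak_tflops * 1e12) / (bandwidth_gb_s * 1e9)


def full_memory_read_seconds(memory_gb, bandwidth_gb_s):
    """Time to stream the whole GPU memory once at peak bandwidth."""
    return memory_gb / bandwidth_gb_s


if __name__ == "__main__":
    bandwidth = V100["memory_bandwidth_gb_s"]
    for key in ("double_precision_tflops", "single_precision_tflops",
                "deep_learning_tflops"):
        ratio = flops_per_byte_to_saturate(V100[key], bandwidth)
        print(f"{key}: about {ratio:.1f} FLOPs per byte to be compute-bound")
    sweep_ms = full_memory_read_seconds(V100["memory_gb"], bandwidth) * 1000
    print(f"Reading all {V100['memory_gb']} GB once takes about {sweep_ms:.1f} ms")
```

Workloads below these ratios, such as simple element-wise operations, are limited by memory bandwidth, while dense matrix multiplications in deep learning can get much closer to the peak compute figures.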

Paperspace

Paperspace stands out in the cloud GPU service market with its user-friendly approach, making advanced computing accessible to a broader audience.

User-Friendly Cloud GPU Service

It is especially popular among developers, data scientists, and AI enthusiasts for its straightforward setup and deployment of GPU-powered virtual machines.

Optimized for Machine Learning

Their services are optimized for machine learning and AI development, offering pre-installed and configured environments for various ML frameworks.

Tailored for Creative Professionals

Additionally, Paperspace provides solutions tailored to creative professionals, including graphic designers and video editors, thanks to their high-performance GPUs and rendering capabilities. The platform is also appreciated for its flexible pricing models, including per-minute billing, which makes it attractive for both small-scale users and larger enterprises.

Pros

- User-friendly and easy setup.

- Popular among developers, data scientists, and AI enthusiasts.

- Pre-installed and configured environments for ML frameworks.

- Suitable for creative professionals with high-performance GPUs.

- Flexible pricing models, including per-minute billing.

Cons

- May not offer the same level of customization as some other providers.

Vultr

Vultr distinguishes itself in the cloud computing market with its emphasis on simplicity and performance. They offer a wide array of cloud services, including high-performance GPU instances.

Simple and Rapid Deployment

These services are particularly appealing to small and medium-sized businesses due to their ease of use, rapid deployment, and competitive pricing. Vultr's GPU offerings are well-suited for a variety of applications, including AI and machine learning, video processing, and gaming servers.

Global Network of Data Centers

Their global network of data centers helps in providing low-latency and reliable services across different geographies. Vultr also offers a straightforward and transparent pricing model, which helps businesses to predict and manage their cloud expenses effectively.

Pros

- Simple and rapid deployment.

- Competitive pricing.

- Suitable for small and medium-sized businesses.

- Good for AI, machine learning, video processing, and gaming.

- Global network of data centers for low-latency services.

Cons

- May lack some advanced features offered by larger competitors.

Vast AI

Vast AI is a unique and innovative player in the cloud GPU market, offering a decentralized cloud computing platform.

Potential for Lower Costs

They connect clients with underutilized GPU resources from various sources, including both commercial providers and private individuals. This approach leads to potentially lower costs and a wide variety of available hardware. However, it can also result in more variability in terms of performance and reliability.

Cost-Effective GPU Workloads

Vast AI is particularly attractive for clients looking for cost-effective solutions for intermittent or less critical GPU workloads, such as experimental AI projects, small-scale data processing, or individual research purposes.

Pros

- Potential for lower costs.

- Wide variety of available hardware.

- Cost-effective for intermittent or less critical GPU workloads.

- Suitable for experimental AI projects and individual research.

Cons

- More variability in performance and reliability due to decentralized resources.

Gcore

Gcore specializes in cloud and edge computing services, with a strong focus on solutions for the gaming and streaming industries.

High-Performance Computing

Their GPU cloud services are designed to handle high-performance computing tasks, offering significant computational power for graphic-intensive applications. Gcore is recognized for its ability to deliver scalable and robust infrastructure, which is crucial for MMO gaming, VR applications, and real-time video processing.

Global Content Delivery Network

They also provide global content delivery network (CDN) services, which complement their cloud offerings by ensuring high-speed data delivery and reduced latency for end-users across the globe.

Pros

- High-performance computing for graphic-intensive applications.

- Scalable and robust infrastructure.

- Global content delivery network (CDN) services.

- Suitable for MMO gaming, VR applications, and real-time video processing.

Cons

- May be less suitable for non-gaming or non-streaming workloads.

Lambda Labs

Lambda Labs is a company deeply focused on AI and machine learning, offering specialized GPU cloud instances for these purposes.

Pre-Configured Environments

They are well-known in the AI research community for providing pre-configured environments with popular AI frameworks, saving valuable setup time for data scientists and researchers. Lambda Labs’ offerings are optimized for deep learning, featuring high-end GPUs and large memory capacities.

Clients Include Academic Institutions

Their clients include academic institutions, AI startups, and large enterprises working on complex AI models and datasets. In addition to cloud services, Lambda Labs also provides dedicated hardware for AI research, further demonstrating their commitment to this field.

Pros

- Pre-configured environments with popular AI frameworks.

- Optimized for deep learning with high-end GPUs and large memory capacities.

- Suitable for AI research, academic institutions, and startups.

Cons

- May have specialized focus and pricing geared towards AI research.

Genesis Cloud

Genesis Cloud provides GPU cloud solutions that strike a balance between affordability and performance.

Tailored for Specific User Groups

Their services are particularly tailored towards startups, small to medium-sized businesses, and academic researchers working in the fields of AI, machine learning, and data processing.

Simple and Intuitive Interface

Genesis Cloud offers a simple and intuitive interface, making it easy for users to deploy and manage their GPU resources.

Emphasis on Environmental Sustainability

Their pricing model is transparent and competitive, making it a cost-effective option for those who need high-performance computing capabilities without a large investment. They also emphasize environmental sustainability, using renewable energy sources to power their data centers.

Pros

- Tailored towards startups, small to medium-sized businesses, and academic researchers.

- Simple and intuitive interface.

- Transparent and competitive pricing.

- Emphasizes environmental sustainability with renewable energy sources.

Cons

- May not offer the same scale and range of services as larger providers.

TensorDock

TensorDock provides a wide range of GPUs, from NVIDIA T4s to A100s, catering to needs such as machine learning, rendering, and other GPU-intensive tasks.

Superior Performance

Tensor Dock claims superior performance on the same GPU types compared to the big clouds, with users such as ELBO.ai and researchers relying on its services for intensive AI tasks.

Cost-Effective Pricing

Tensor Dock is known for industry-leading pricing, keeping costs down through custom-built servers.

Pros

- Wide range of GPU options.
- High-performance servers.
- Competitive pricing.

Cons

- May not have the same brand recognition as larger cloud providers.

Microsoft Azure

Azure provides the N-Series Virtual Machines, leveraging NVIDIA GPUs for high-performance computing, suited for deep learning and simulations.

Enhanced AI Supercomputing

Azure recently expanded its lineup with the NDm A100 v4 series, featuring NVIDIA A100 Tensor Core 80GB GPUs, enhancing its AI supercomputing capabilities.

Pricing

Pricing details are not specified here. As a major provider, Azure may offer competitive yet varied pricing options.

Pros

- Strong performance with latest NVIDIA GPUs.
- Suited for demanding applications.
- Expansive cloud infrastructure.

Cons

- Pricing and customization options might be complex for smaller users.

IBM Cloud

IBM Cloud offers NVIDIA GPUs aimed at training enterprise-class foundation models through its WatsonX services.

Flexible Server Selection

IBM Cloud offers a flexible server-selection process and seamless integration with IBM Cloud architecture and applications.

Pricing

Pricing is unclear, but it is likely to be competitive and in line with other major providers.

Pros

- Innovative GPU infrastructure.
- Flexible server selection.
- Strong integration with IBM Cloud services.

Cons

- May not be as specialized in GPU services as dedicated providers.

FluidStack

FluidStack is a cloud computing service known for offering efficient and cost-effective GPU services. They cater to businesses and individuals requiring high computational power.

Ideal for Moderate Workloads

FluidStack is ideal for small to medium enterprises or individuals requiring affordable and reliable GPU services for moderate workloads.

Product Offerings

- GPU Cloud Services: High-performance GPUs suitable for machine learning, video processing, and other intensive tasks.
- Cloud Rendering: Specialized services for 3D rendering.

Pros

- Cost-effective compared to many competitors.
- Flexible and scalable solutions.
- User-friendly interface and easy setup.

Cons

- Limited global reach compared to larger providers.
- Might not suit very high-end computational needs.

Leader GPU

Leader GPU is recognized for its cutting-edge technology and wide range of GPU services. They target professionals in data science, gaming, and AI.

High-End GPU Solutions

Leader GPU is suitable for businesses and professionals needing high-end, customizable GPU solutions, though at a higher cost.

Product Offerings

- Diverse GPU Selection: A wide range of GPUs, including the latest models from Nvidia and AMD.
- Customizable Solutions: Tailored services to meet specific client needs.

Pros

- Offers some of the latest and most powerful GPUs.
- High customization potential.
- Strong technical support.

Cons

- Can be more expensive than some competitors.
- Might have a steeper learning curve for new users.

DataCrunch

DataCrunch is a growing name in cloud computing, focusing on providing affordable, scalable GPU services for startups and developers.

Affordable and Scalable

DataCrunch is an excellent choice for startups and individual developers who need affordable and scalable GPU services but don’t require the latest GPU models.

Product Offerings

- GPU Instances: Affordable and scalable GPU instances for various computational needs.
- Data Science Focus: Services tailored for machine learning and data analysis.

Pros

- Very cost-effective, especially for startups and individual developers.
- Easy to scale services based on demand.
- Good customer support.

Cons

- Limited options in terms of GPU models.
- Not as well-known, which might affect trust for some users.

Google Cloud GPU

Google Cloud is a prominent player in the cloud computing industry, and their GPU offerings are no exception.

Wide Range of GPU Types

They provide a wide range of GPU types, including NVIDIA GPUs, for various use cases like machine learning, scientific computing, and graphics rendering. Google Cloud GPU instances are known for their reliability, scalability, and integration with popular machine learning frameworks like TensorFlow.

Pricing Details

Pricing details can vary by type, region, and usage; check Google Cloud's website for specific information.
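Because rates differ by GPU type, region, and usage, a rough estimate before committing to a provider can help. The sketch below multiplies an hourly per-GPU rate by GPU count and hours, then picks the cheapest option. The provider names and rates in the usage example are hypothetical placeholders, not real quotes, and the model ignores storage, networking, and discount tiers.

```python
def estimate_cost(hourly_rate_usd, gpus, hours):
    """Return the compute cost in USD for `gpus` GPUs running for `hours` hours."""
    if hourly_rate_usd < 0 or gpus < 0 or hours < 0:
        raise ValueError("rate, GPU count, and hours must be non-negative")
    return hourly_rate_usd * gpus * hours


def cheapest(rates, gpus, hours):
    """Given {provider: hourly per-GPU rate}, return (cheapest provider, all costs)."""
    if not rates:
        raise ValueError("rates must contain at least one provider")
    costs = {name: estimate_cost(rate, gpus, hours) for name, rate in rates.items()}
    return min(costs, key=costs.get), costs


if __name__ == "__main__":
    # Hypothetical per-GPU hourly rates; replace with figures from each provider's pricing page.
    example_rates = {"provider_a": 2.50, "provider_b": 1.80}
    best, costs = cheapest(example_rates, gpus=4, hours=72)
    for name, cost in costs.items():
        print(f"{name}: ${cost:,.2f}")
    print(f"cheapest: {best}")
```

Keeping the rate table as plain data makes it easy to rerun the comparison whenever a provider changes its prices.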

Pros

- Extensive global presence.
- Wide array of GPU types and configurations.
- Strong integration with Google’s machine learning services.
- Excellent support for machine learning workloads.

Cons

- Pricing can be on the higher side for intensive GPU workloads.
- Complex pricing structure may require careful cost management.

Amazon AWS

Amazon Web Services (AWS) is one of the largest and most established cloud computing providers globally.

Robust Selection of GPU Instances

AWS offers a robust selection of GPU instances, including instances with NVIDIA GPUs, instances with AMD GPUs, and instances that pair Arm-based AWS Graviton2 processors with NVIDIA GPUs, catering to a broad range of workloads.

Pricing Details

AWS GPU instance pricing varies by type, region, and usage; check the AWS website for specific pricing information.

Pros

- Extensive global coverage.
- Wide variety of GPU instances available.
- Strong ecosystem of services and resources.
- Excellent documentation and support.

Cons

- Pricing can be complex and may require cost monitoring.
- Costs can escalate quickly for resource-intensive workloads.

Linode

About Linode

Whether you're managing enterprise infrastructure or working on a personal project, Linode aims to provide the price, support, and scale you need to move your ideas forward.

Linode makes it simple to manage workloads in the cloud with flat pricing across all global data centers, an intuitive Cloud Manager, a fully featured API, extensive documentation, and award-winning support.

With scalable, easy-to-use, economical, and accessible Linux cloud solutions and services, Linode aims to foster innovation. Its products and services are designed to help developers and businesses create, deploy, secure, and scale applications more quickly and affordably, from the cloud to the edge, on what it describes as the largest distributed network in the world.

Reasons Developers Choose Linode

Dedication to developers: Linode does not build products that compete directly with those its customers have built.

Commitment to openness: Linode does not hide the complexity of its prices or offerings.

Sole focus on infrastructure: Linode has repeatedly shown that a successful business can be built around the needs of its customers.

Linode positions itself as a cloud provider that developers can rely on, offering an alternative to the pricey, complicated, and cutthroat options currently on the market.

Linode Product Offering:

- Dedicated CPU
- Shared CPU
- High Memory
- GPU
- Kubernetes
- Block Storage
- Object Storage
- Backups
- Managed Databases
- Cloud Firewall
- DDoS Protection
- DNS Manager
- NodeBalancers
- VLAN
- Managed
- Professional Services
- Cloud Manager
- API
- CLI
- Terraform Provider
- Ansible Collection

Runpod

Run:ai

The Atlas platform from Run:ai enables users to virtualize and orchestrate their AI workloads, with a focus on optimizing GPU resources whether on-premises or in the cloud. It abstracts all of this hardware away, allowing developers to interact with the pooled resources using standard tools like Jupyter notebooks and IT teams to gain a better understanding of how these resources are used.

Tel Aviv-based Run:ai, a startup that helps developers and operations teams manage and optimize their AI infrastructure, announced a $75 million Series C funding round led by Tiger Global Management and Insight Partners, which also led the company's $30 million Series B round in 2021. The round also included previous investors TLV Partners and S Capital VC, bringing Run:ai's total funding to $118 million.

The new round comes at a time when the company is rapidly expanding. According to the company, its annual recurring revenue has increased 9x in the last year, while its staff has more than tripled. Run:ai CEO Omri Geller attributes this to a variety of factors, including the company's ability to build a global partner network to accelerate growth and the overall enterprise momentum for the technology.

"As organizations move beyond the incubation stage and begin scaling their AI initiatives, they are unable to meet the expected rate of AI innovation due to severe challenges managing their AI infrastructure," he explained.

CoreWeave

Data scientists, machine learning engineers, and software engineers, among others, can use CoreWeave to deploy artificial intelligence applications and machine learning models. According to the company, clients can also draw on CoreWeave's specialized DevOps expertise to manage complex GPU-powered AI solutions.

CoreWeave, a specialized cloud services provider for GPU-based workloads, announced a collaboration with GPU giant Nvidia to help businesses scale their infrastructure, reduce costs, and improve efficiencies.

This relatively new cloud services provider offers on-demand computing resources across several tech verticals and has stated that it will continue to improve performance with the help of a $50 million funding round from Magnetar Capital.